Button Inputer

Over the last few days I made a thing that's changed how I work: a push-to-talk button for voice input with both a fast ready time and fast local processing. As a maker, I'd call most of this project AI slop in its planning, code, and physical design, but I still ended up with a thing that solves a problem in my daily workflow (edit: 2 months later, I still use it dozens of times a day) and that I couldn’t find a better alternative to buy. So I’ll share what I think is interesting about the process, to help me think through how I work and what value I add as a maker.

The Need

Like most of my friends who code, I’m doing a lot of ‘vibe coding’ these days. For work, I sometimes create UX prototypes, which is a great use of AI for coding: the code has a short life cycle so maintainability matters little, iteration needs to be quick, and there's a high likelihood of throwing the code away after learning about the experience.

Voice input ends up being great for coding with LLMs because precision isn’t necessary, and rambling requirements actually help the statistical models do what I mean, not what I say.

I found myself frequently using voice transcription but was often disappointed at how poorly it kept me iterating quickly and focused on a problem. To work well, voice input needs to be instantly available when my brain is ready to spew. It needs to be instantly (physically) accessible and take minimal effort. My MacBook has a microphone button that even has a pad-printed icon. However, it's one of 12 similarly sized buttons in a row, and when I actually press it, it is inconsistent and very slow to open the microphone. I regularly have to press multiple times to get the microphone to open. Sometimes the audio earcon is out of sync with the recording. AI interfaces have their own voice input buttons, but the interaction varies from product to product. And again, they have latency problems or require pressing stop to end recording. Like many general-purpose products, they're made for occasional use and not for the enterprise-level expectation of reliability that I found I needed.

I wanted an audio input that was lightweight, very fast, and worked agnostically across a range of applications on my computer.

First Guess (Proof-of-Concept)

To make something, you need to have an initial idea of what you’ll be making - or else you have nothing. I have always started projects with an estimate of how they might work and then adapted that direction as I went along and learned more. For this, I started with the assumption that a push-to-talk button to open/close the microphone for transcription would be useful if fast enough. I knew a microcontroller board could register as a keyboard and figured it could send the keystrokes of the transcription. I also assumed Whisper running locally could be faster than the cloud-based transcription APIs.

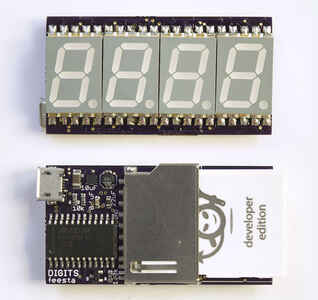

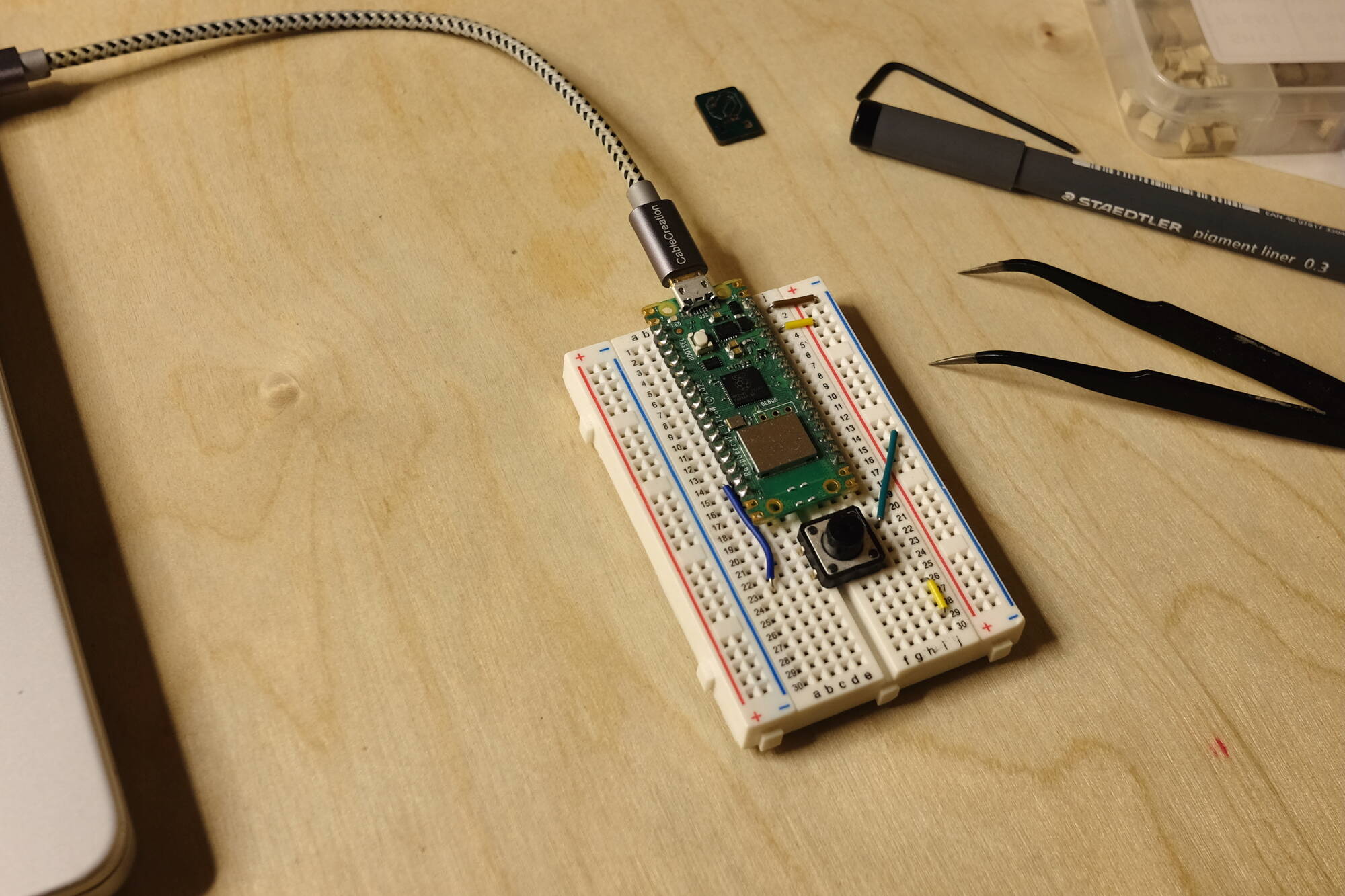

Installing whisper.cpp and running a test confirmed it could respond in less than a second, which seemed fast enough. A quick ChatGPT query filled in some gaps: Hammerspoon to listen for the shortcut, trigger whisper.cpp, and then either paste the result or type it out as text. What else was needed? A button. I used a Raspberry Pi Pico with a button and set it up as a USB HID device that sends key-down and key-up events for the F15 key, which Hammerspoon would listen for.
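The hold-to-talk flow described above can be sketched in a few lines of Python. This is only an illustration of the logic, not the actual Hammerspoon script: the class name `PushToTalk` and its four callables are hypothetical stand-ins for opening the mic, closing it, running a local transcription, and pasting the text. Ignoring repeated key-down events is also an assumption, added in case the OS delivers key repeats while the button is held.

```python
from typing import Callable, Optional


class PushToTalk:
    """Hold-to-record flow: key down opens the mic, key up transcribes."""

    def __init__(
        self,
        start_recording: Callable[[], None],
        stop_recording: Callable[[], str],  # returns the path of the recorded audio
        transcribe: Callable[[str], str],   # e.g. a wrapper around a local Whisper run
        emit_text: Callable[[str], None],   # e.g. paste into the focused application
    ) -> None:
        self._start_recording = start_recording
        self._stop_recording = stop_recording
        self._transcribe = transcribe
        self._emit_text = emit_text
        self.recording = False

    def key_down(self) -> None:
        # A held key may produce repeated key-down events; only the first one counts.
        if self.recording:
            return
        self.recording = True
        self._start_recording()

    def key_up(self) -> Optional[str]:
        # A key-up without a matching key-down is ignored.
        if not self.recording:
            return None
        self.recording = False
        audio_path = self._stop_recording()
        text = self._transcribe(audio_path).strip()
        if text:  # skip empty transcriptions (for example, an accidental tap)
            self._emit_text(text)
        return text
```

Wiring `key_down` and `key_up` to the F15 press and release handlers keeps the button itself dumb: it only reports its state, and everything about audio and text stays on the computer.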

All the code was written for me, and I only had a few issues to work through around configuring the USB device so that it would still allow me to program it.
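On the button side, the core job is turning raw switch reads into one key-down and one key-up event per press. Below is a minimal Python sketch of that edge detection. The function name `key_events` and the `stable_reads` debounce threshold are hypothetical, and debouncing itself is an assumption (mechanical switches tend to bounce); the real firmware may handle this differently. On the Pico, each `"down"` or `"up"` would map to pressing or releasing F15 over USB HID.

```python
from typing import Iterable, Iterator


def key_events(samples: Iterable[bool], stable_reads: int = 3) -> Iterator[str]:
    """Yield "down"/"up" once a changed button level has held for stable_reads samples."""
    pressed = False  # debounced state; the button starts released
    run = 0          # consecutive samples that disagree with the debounced state
    for level in samples:
        if level == pressed:
            run = 0  # bounce or no change: reset the counter
            continue
        run += 1
        if run >= stable_reads:
            pressed = level
            run = 0
            yield "down" if pressed else "up"
```

A short glitch (one or two stray reads) never reaches the threshold, so it produces no event and no stray recording.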

Within a day I saw the value and wanted a non-breadboard version.

Post-POC

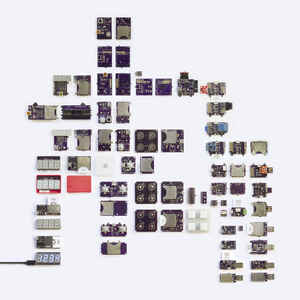

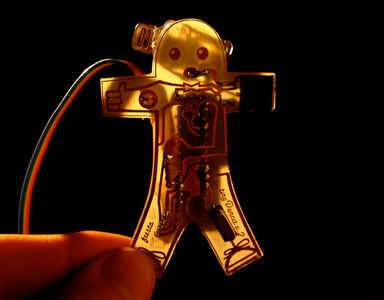

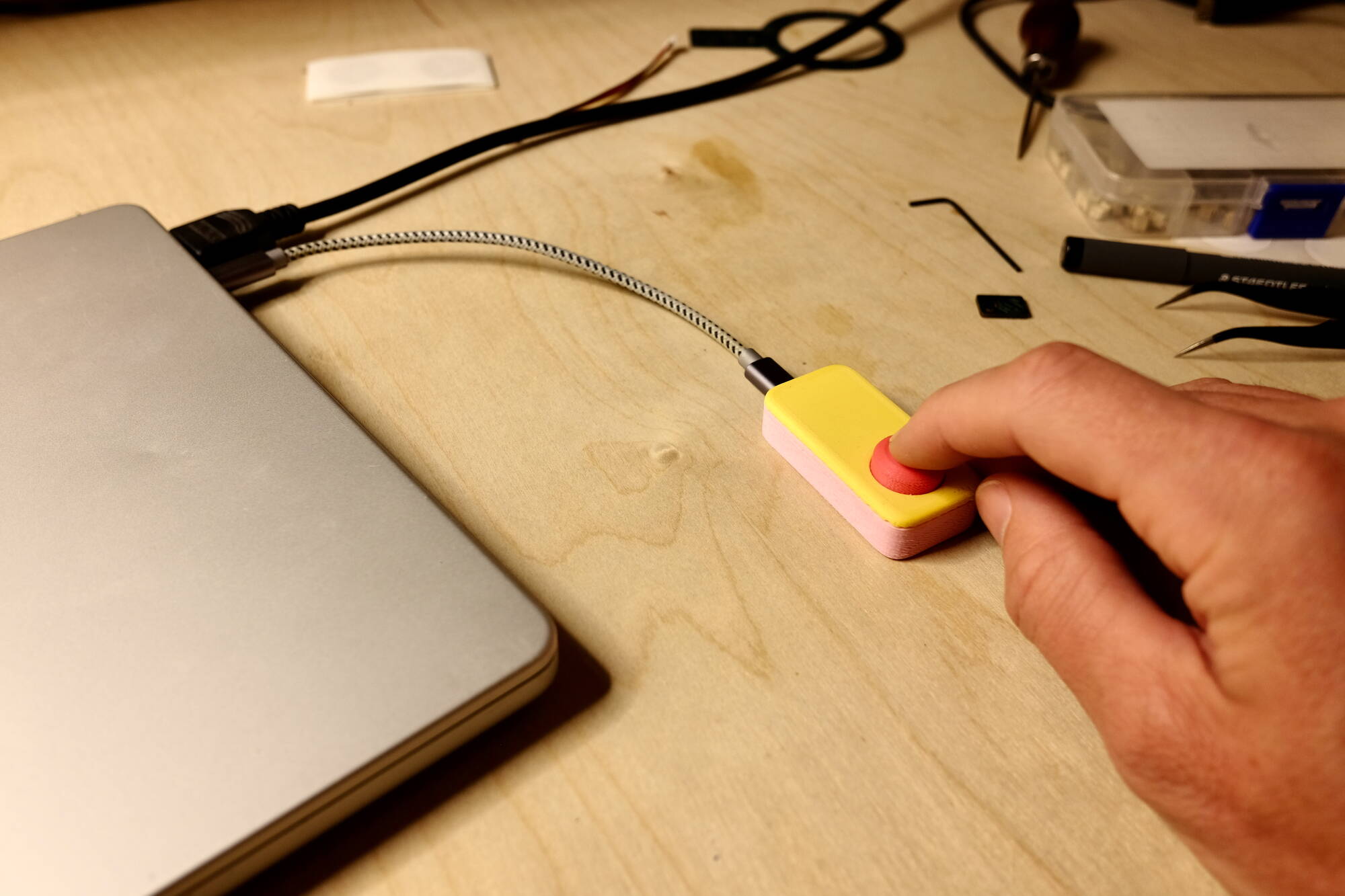

As a maker in the world of AI, I've been spending more time on the 3D aspects since those can't yet be generated for me. I made a tiny PCB in Fusion 360 and fabricated it on my CNC machine. Then, with the help of image gen for the ID and an image-to-3D model (Hunyuan3D), I modeled the form and printed it out.

The majority of my time was spent on the areas where I have the least experience but that are also key to making something more "real" - tolerances for the plastic parts and getting the button cap to click just right. This is still just a 3D printed part, but I've been intrigued by the ability to make useful and durable-enough products as one-offs fabricated at home.

Follow-Up

I've now been using it for a while and keep it plugged into my laptop as I carry it around. I miss it when it's not there. I see a path to pull the Hammerspoon component into a standalone app that also handles the model install, which would allow others to use this, but I haven't ventured there yet.

Also looking ahead, I've considered additional uses for it, like voice input for shortcuts in other software (Fusion 360) or connecting it to a local LLM for commanding my computer. My hesitation is that I see the simplicity of a big red button that does one thing well, and diminishing that would make it less useful to me.