Work Notes #2

I'm back after doing some consulting work. I’ve been looking at how a user can create 3D objects with AI.

1: First I tried the simplest approach: take 2D images and thicken them. This is sort of cheating, but it could relate to using a laser cutter or CNC to cut out sheet goods. I set up a prompt to generate 2D images.

Black and white silhouette of a __list of objects__,

Single-shape silhouette art,

Figure-ground silhouette design,

archetype iconography, black and white, simple style

Negative prompt: color, photo, pencil, low quality, bad drawing, extreme, confusing, abstract, tightly cropped, cutoff

Then I wrote a Python script to cut out each shape and extrude it slightly to make it 3D. This Text-to-3D method is pretty consistent and high resolution, but with the obvious limit of being based on a 2D shape. I see it potentially being useful for signage, children's toys, or board game parts, where simple production is a constraint and a shape abstraction is sufficient.
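The cut-out-and-extrude step can be sketched roughly like this. It is not the script from these notes, just a minimal standard-library illustration. It assumes the silhouette has already been thresholded into a grid of 0/1 cells. The names `extrude_mask` and `to_obj` are hypothetical.

```python
def extrude_mask(mask, thickness=1.0):
    """Extrude a 2D grid of 0/1 cells into a closed quad mesh.

    Returns (vertices, faces); faces are lists of 0-based vertex indices.
    """
    rows, cols = len(mask), len(mask[0])
    vertices, index, faces = [], {}, []
    t = thickness

    def vid(x, y, z):
        # Reuse a vertex if this corner was already emitted.
        key = (x, y, z)
        if key not in index:
            index[key] = len(vertices)
            vertices.append(key)
        return index[key]

    def filled(r, c):
        return 0 <= r < rows and 0 <= c < cols and bool(mask[r][c])

    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            x0, x1, y0, y1 = c, c + 1, r, r + 1
            # Top and bottom caps, wound so the normals point outward.
            faces.append([vid(x0, y0, t), vid(x1, y0, t), vid(x1, y1, t), vid(x0, y1, t)])
            faces.append([vid(x0, y1, 0), vid(x1, y1, 0), vid(x1, y0, 0), vid(x0, y0, 0)])
            # Side walls only where the neighbouring cell is empty.
            if not filled(r - 1, c):
                faces.append([vid(x0, y0, 0), vid(x1, y0, 0), vid(x1, y0, t), vid(x0, y0, t)])
            if not filled(r + 1, c):
                faces.append([vid(x1, y1, 0), vid(x0, y1, 0), vid(x0, y1, t), vid(x1, y1, t)])
            if not filled(r, c - 1):
                faces.append([vid(x0, y1, 0), vid(x0, y0, 0), vid(x0, y0, t), vid(x0, y1, t)])
            if not filled(r, c + 1):
                faces.append([vid(x1, y0, 0), vid(x1, y1, 0), vid(x1, y1, t), vid(x1, y0, t)])
    return vertices, faces


def to_obj(vertices, faces):
    """Serialize the mesh as Wavefront OBJ text (OBJ indices are 1-based)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i + 1) for i in face) for face in faces]
    return "\n".join(lines) + "\n"


# Tiny L-shaped silhouette standing in for a thresholded image.
silhouette = [
    [1, 0],
    [1, 1],
]
verts, quads = extrude_mask(silhouette, thickness=0.2)
obj_text = to_obj(verts, quads)
```

One quad per pixel keeps the sketch short but makes for a heavy mesh at image resolution. Tracing the outline and extruding that polygon would likely be a better fit for a laser cutter or CNC workflow.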

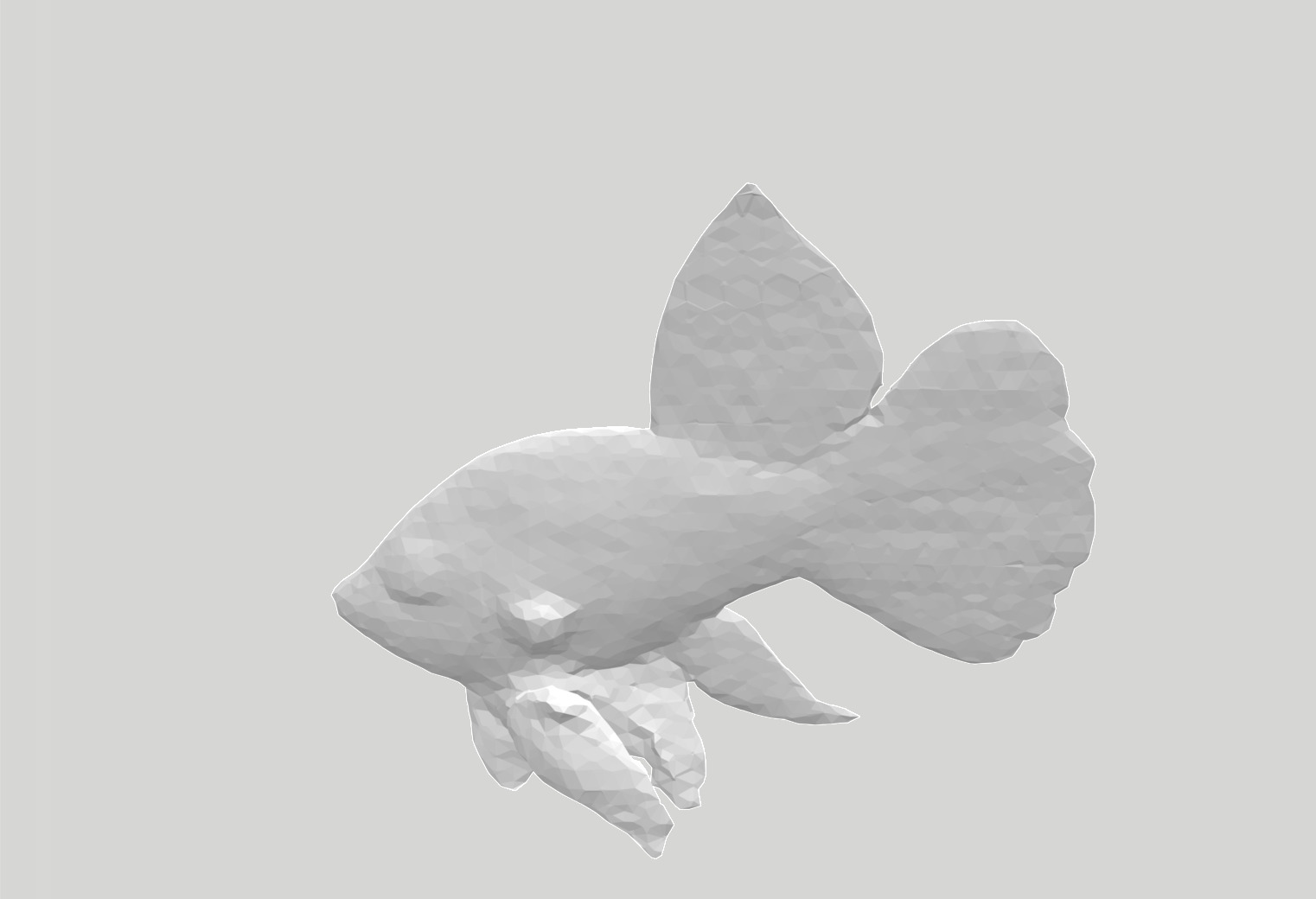

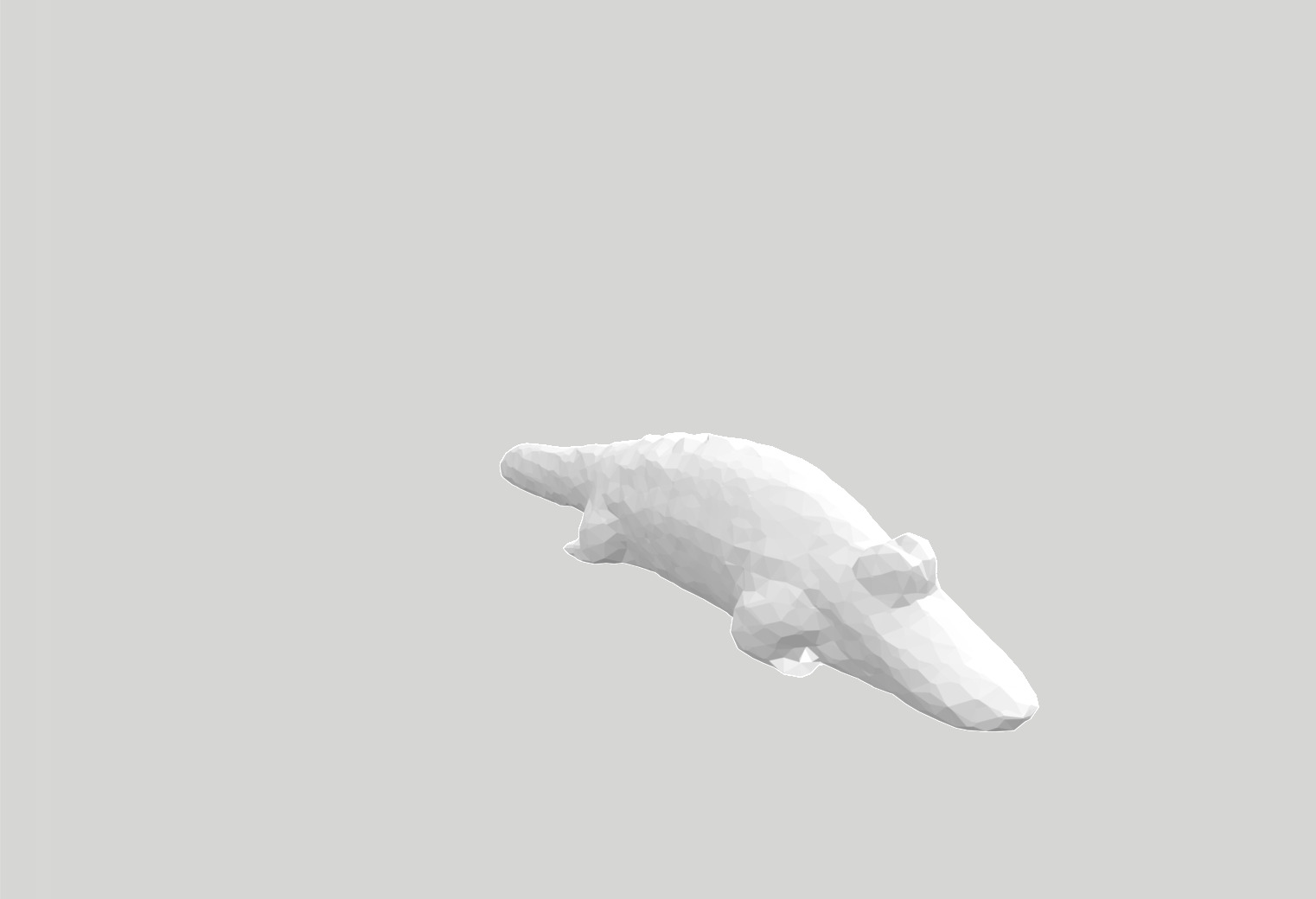

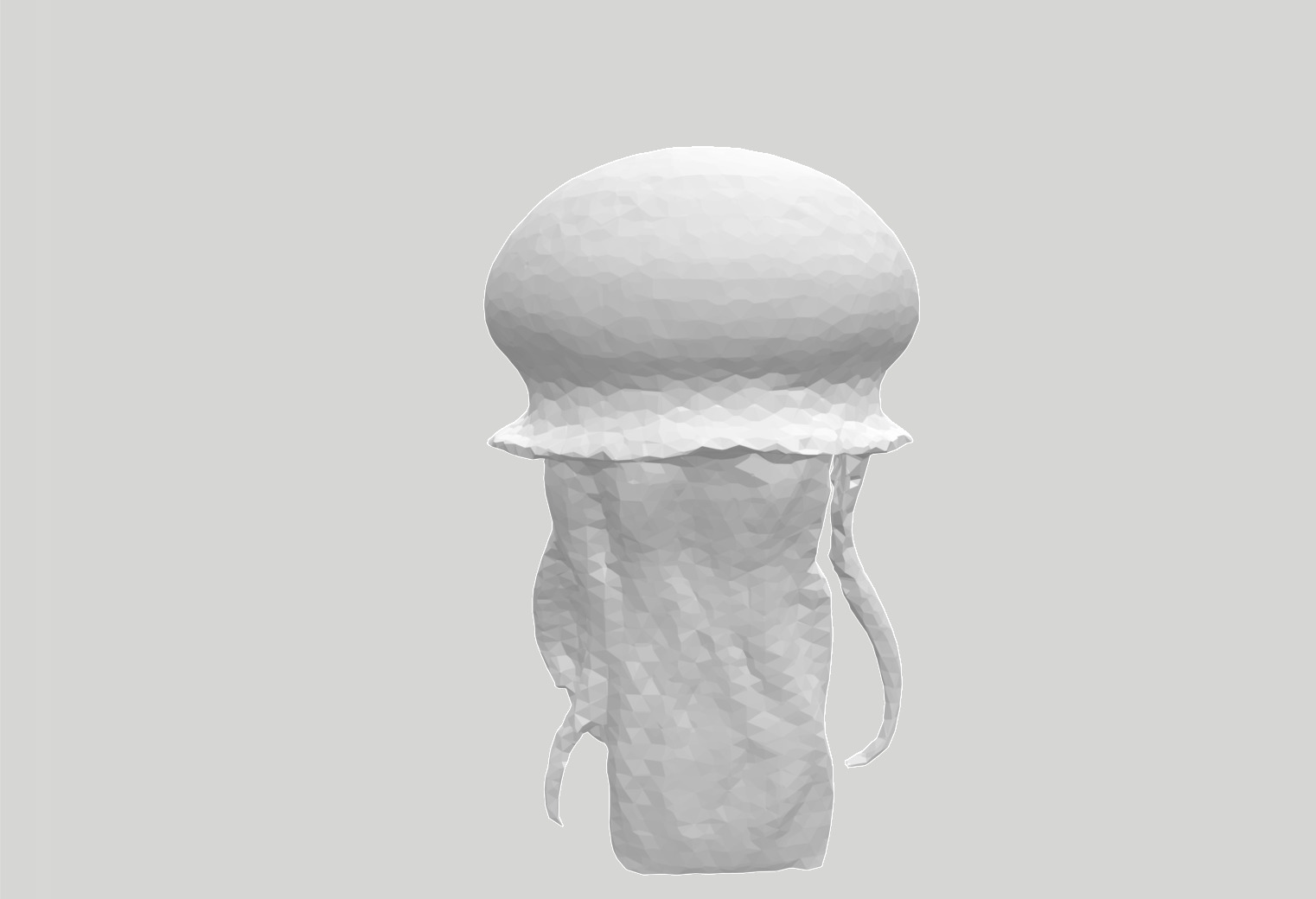

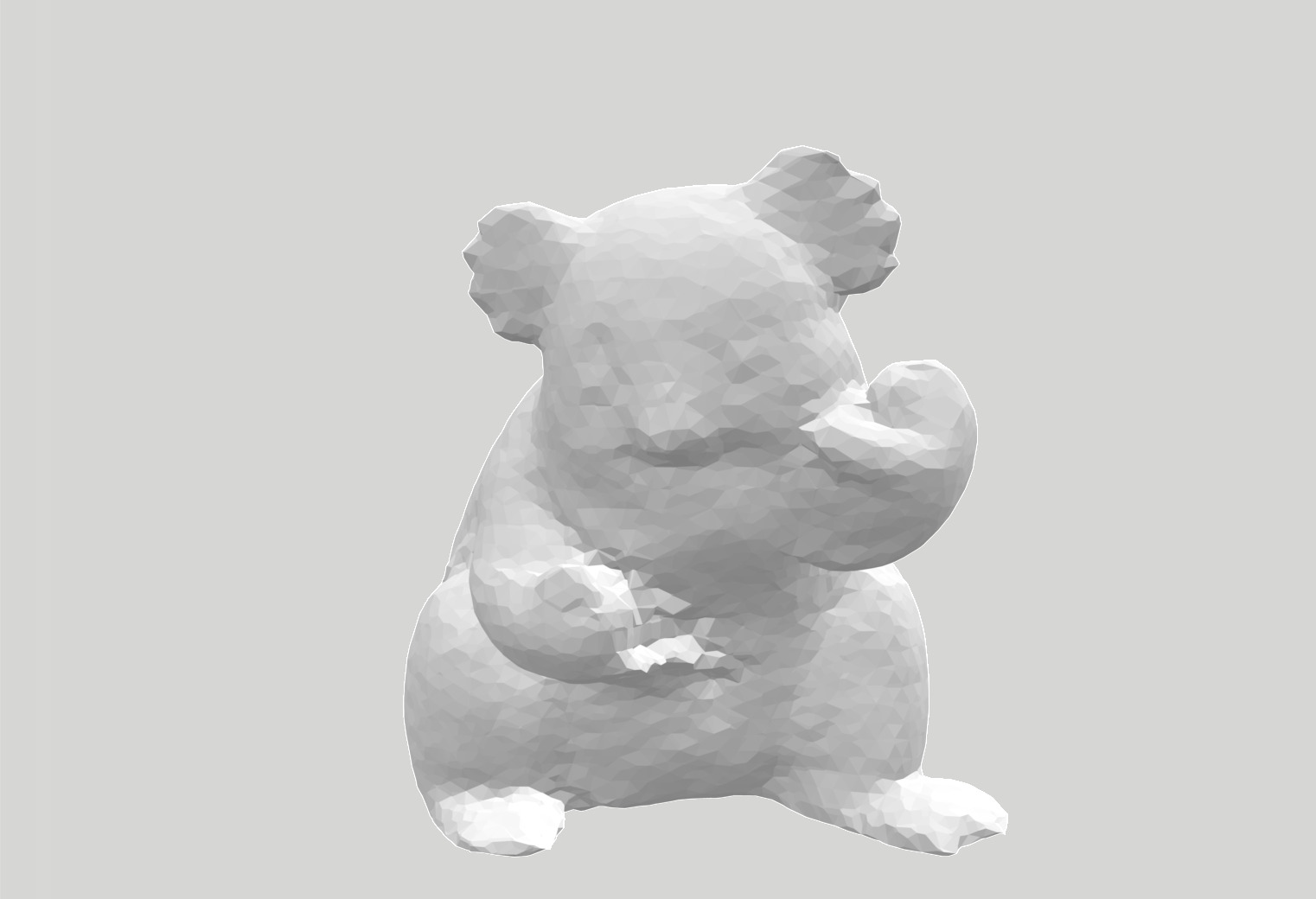

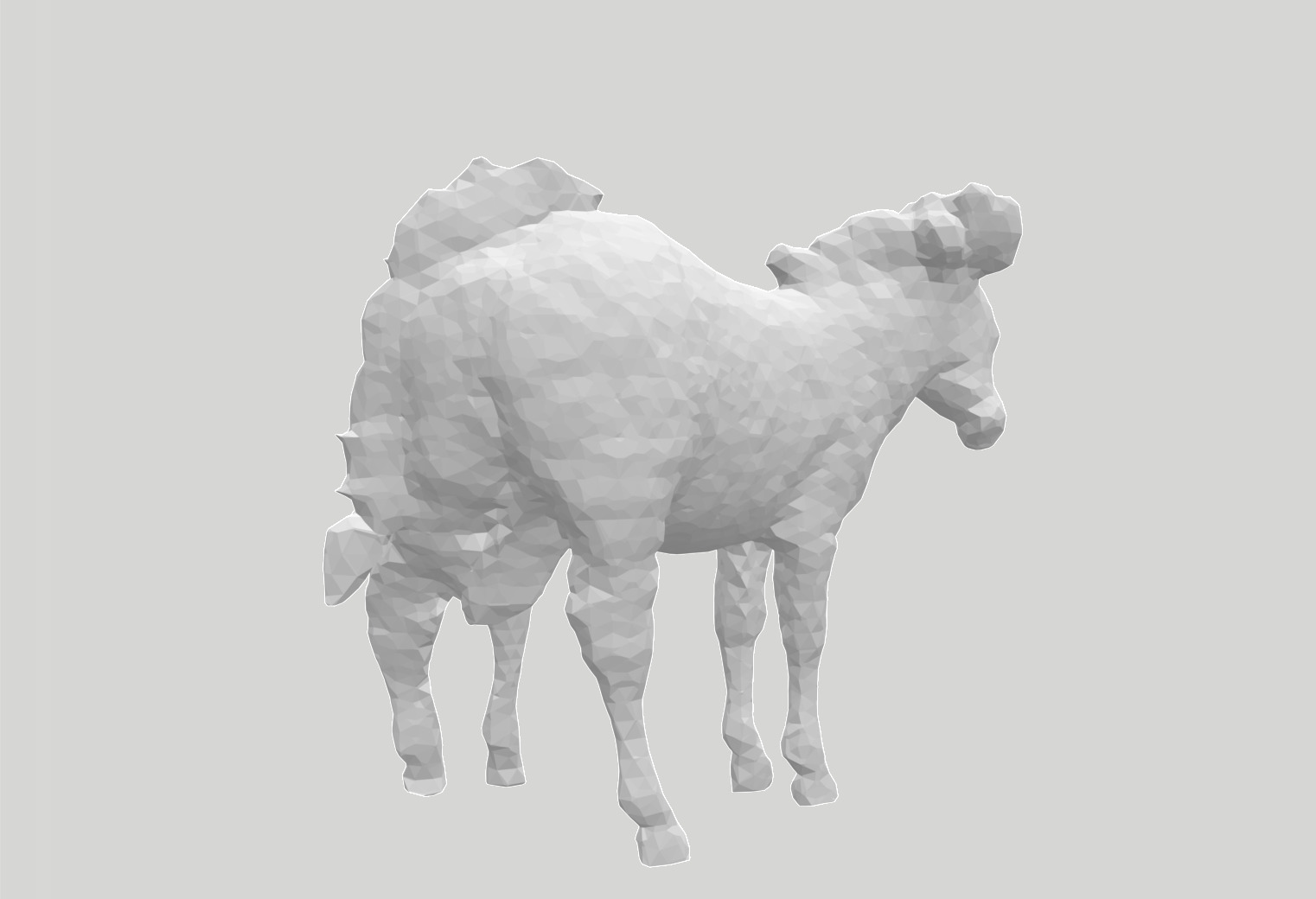

2: I’ve seen generative models for Text-to-3D and Image-to-3D.

The generated models are impressive in that the approach works at all, but they're also kind of crap right now. I wondered, given the current state of the art, if they might be interesting at scale. Stability AI released an Image-to-3D model (Hugging Face, GitHub). I set it up to run on Runpod, which was straightforward after a few hoops. I used ChatGPT to generate a list of 200 animals. Example:

{Lion | Tiger | Elephant | Giraffe | Zebra | Rhino | Hippo | Cheetah | Leopard | Buffalo | Hyena | Crocodile | Kangaroo | Koala | Wallaby | Platypus | Echidna | Emu | Ostrich | Penguin | Seal}

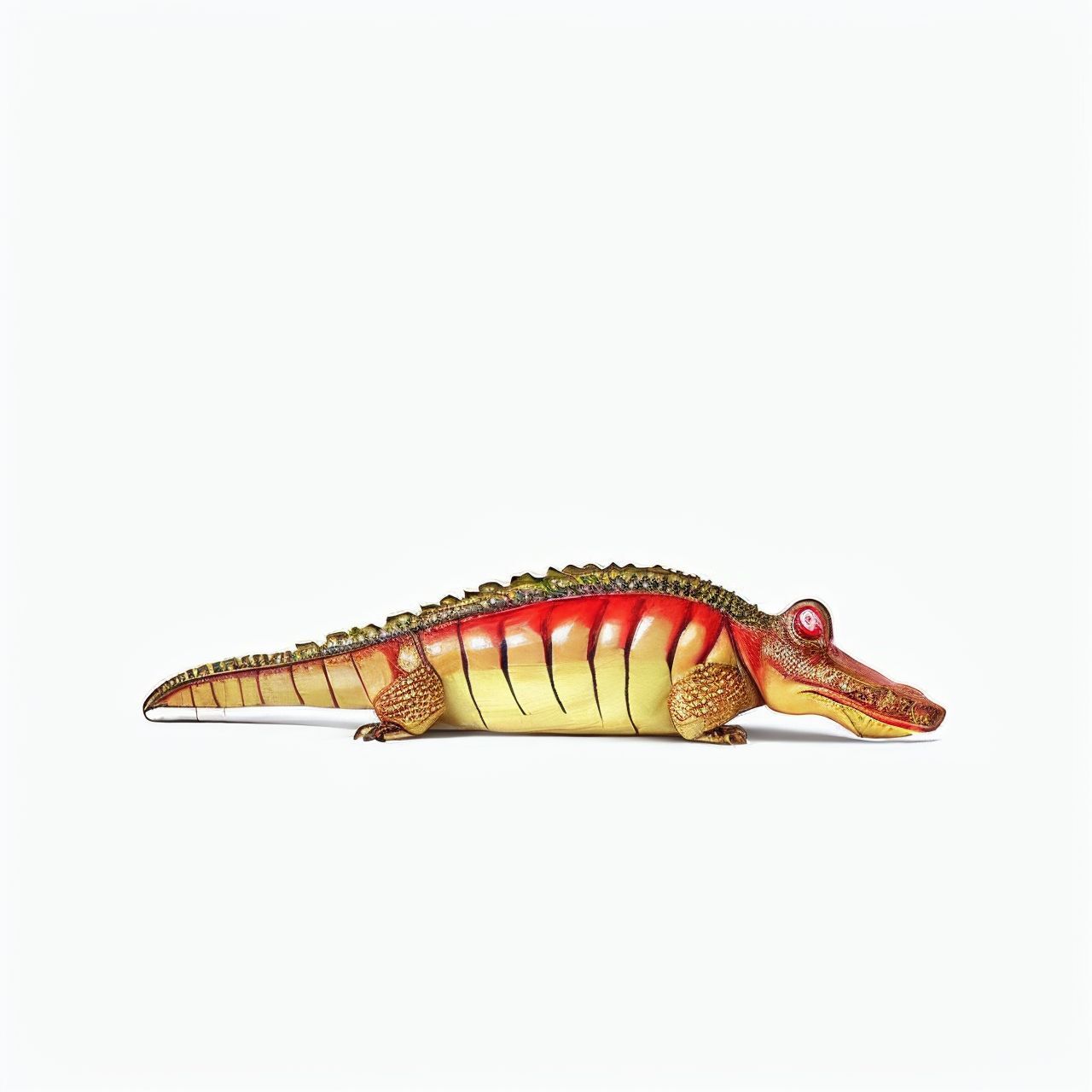

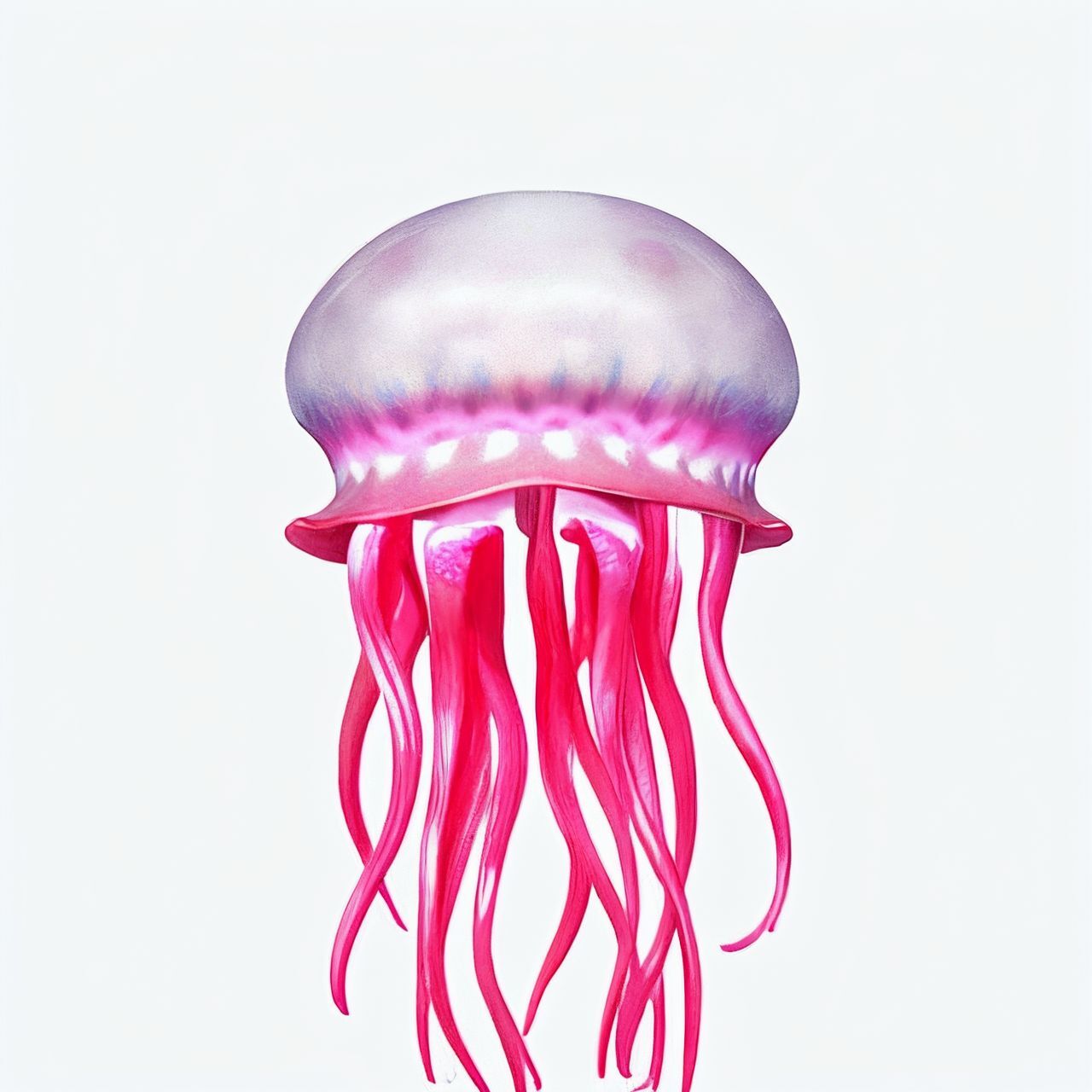

Then I used Stable Diffusion to generate illustrations of the animals.

3D CG rendering of a (small:1.2) (cute:0.0) __list of animals__,

from_side, centered, isolated,

(white background, transparent background:1.2), full body, beautiful composition, high resolution

Negative prompt: (vignetting:1.1), (low quality, low resolution:1.2), (cropped, cut-off:1.2), multiple views, multiples, (dark background:1.3), zero margin, (shadows:1.2), (gradient background:1.3), (blurry:1.2), (partially visible:1.2), ground, branch, water, environment, tree, grass, screenshot, gradated lighting, circular base
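To make the list-to-prompt step concrete, here is a small plain-Python sketch of substituting each animal into the `__list of animals__` placeholder. It only illustrates the idea and is not the mechanism of whichever Stable Diffusion tool was used here. The helper names `parse_options` and `expand` are hypothetical, and the template is shortened.

```python
TEMPLATE = (
    "3D CG rendering of a (small:1.2) (cute:0.0) __list of animals__, "
    "from_side, centered, isolated"
)


def parse_options(text):
    """Split a '{A | B | C}' style list into its individual items."""
    inner = text.strip().strip("{}")
    return [item.strip() for item in inner.split("|") if item.strip()]


def expand(template, wildcard, options):
    """Return one prompt per option, replacing the __wildcard__ token."""
    token = f"__{wildcard}__"
    return [template.replace(token, option) for option in options]


animals = parse_options("{Lion | Tiger | Elephant | Giraffe | Zebra}")
prompts = expand(TEMPLATE, "list of animals", animals)
for prompt in prompts:
    print(prompt)
```

Generating several images per animal, for example with different seeds, is one way a 200-item list could turn into a much larger batch.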

Finally, I let it run for a few hours, creating more than 800 images from the list. The generated 3D models aren't great, but I had a ton of them.

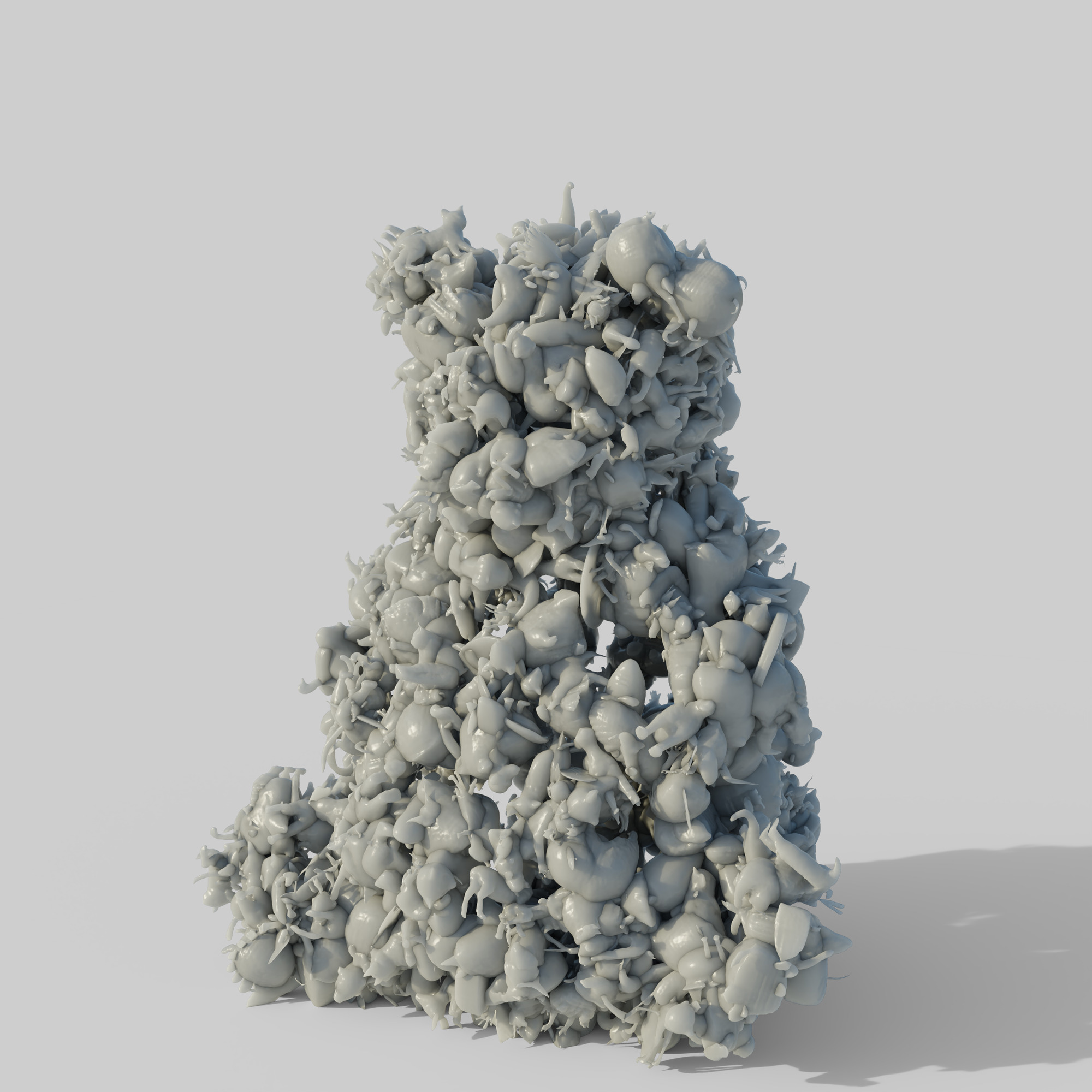

3: I started to merge them together. First I tried using physics modifiers in Blender to arrange them. In this case I used objects I'd generated from the news:

Then I wrote a Python script to randomly place the animal models on a larger model. This was weird and fun.
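One way to pick random placement spots on a larger model is to sample points on its triangles, weighted by area so big faces get more animals. This is only a sketch of that idea under the assumption that the larger model is available as vertex and triangle lists. It is not the script from these notes, and `sample_surface_points` is a hypothetical name. Orientation and scaling of each placed model are left out.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of a 3D triangle from the cross product of two edges."""
    ab = [b[k] - a[k] for k in range(3)]
    ac = [c[k] - a[k] for k in range(3)]
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * math.sqrt(sum(v * v for v in cross))


def sample_surface_points(vertices, triangles, count, rng=None):
    """Pick `count` random points on a triangle mesh, weighted by face area."""
    rng = rng or random.Random()
    areas = [triangle_area(*(vertices[i] for i in tri)) for tri in triangles]
    chosen = rng.choices(triangles, weights=areas, k=count)
    points = []
    for tri in chosen:
        a, b, c = (vertices[i] for i in tri)
        u, v = rng.random(), rng.random()
        if u + v > 1.0:
            # Reflect back into the triangle to keep the sampling uniform.
            u, v = 1.0 - u, 1.0 - v
        points.append(
            tuple(a[k] + u * (b[k] - a[k]) + v * (c[k] - a[k]) for k in range(3))
        )
    return points


# A flat unit square (two triangles) standing in for the larger model.
square_vertices = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
square_triangles = [(0, 1, 2), (0, 2, 3)]
spots = sample_surface_points(square_vertices, square_triangles, 50, random.Random(0))
```

Each returned point could then serve as the origin for one animal model.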

I haven’t figured out how to print them yet. The supports are massive and would leave defects all over. The models are also solid, with inconsistent geometry, so I’ve been unsuccessful at hollowing them and adding air holes to release resin. Doing this manually for each shape is too complicated for my patience.